Deploying OpenStack 2024.1 (Caracal) on Ubuntu 22.04


1. Reference: the official documentation.

2. Unless a step says which node it applies to, perform it on the controller node only.
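The steps below use placeholders such as RABBIT_PASS, KEYSTONE_DBPASS, ADMIN_PASS and METADATA_SECRET in place of real secrets. The official install guide suggests generating such values with openssl rand -hex 10. The sketch below does the equivalent using only coreutils. The gen_pass function is a hypothetical helper and is not part of any OpenStack tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a random hex password (two hex characters per byte).
# Similar in spirit to `openssl rand -hex 10`, but uses only coreutils.
gen_pass() {
  local bytes=${1:-10}
  head -c "$bytes" /dev/urandom | od -An -v -tx1 | tr -d ' \n'
  echo
}

# Example: one value per placeholder (extend the list as needed).
for name in RABBIT_PASS KEYSTONE_DBPASS ADMIN_PASS GLANCE_DBPASS GLANCE_PASS; do
  printf '%s=%s\n' "$name" "$(gen_pass)"
done
```

Record each value once and substitute it consistently. The same RABBIT_PASS, NOVA_PASS, NEUTRON_PASS and METADATA_SECRET appear in several files and on more than one node.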

1. Environment

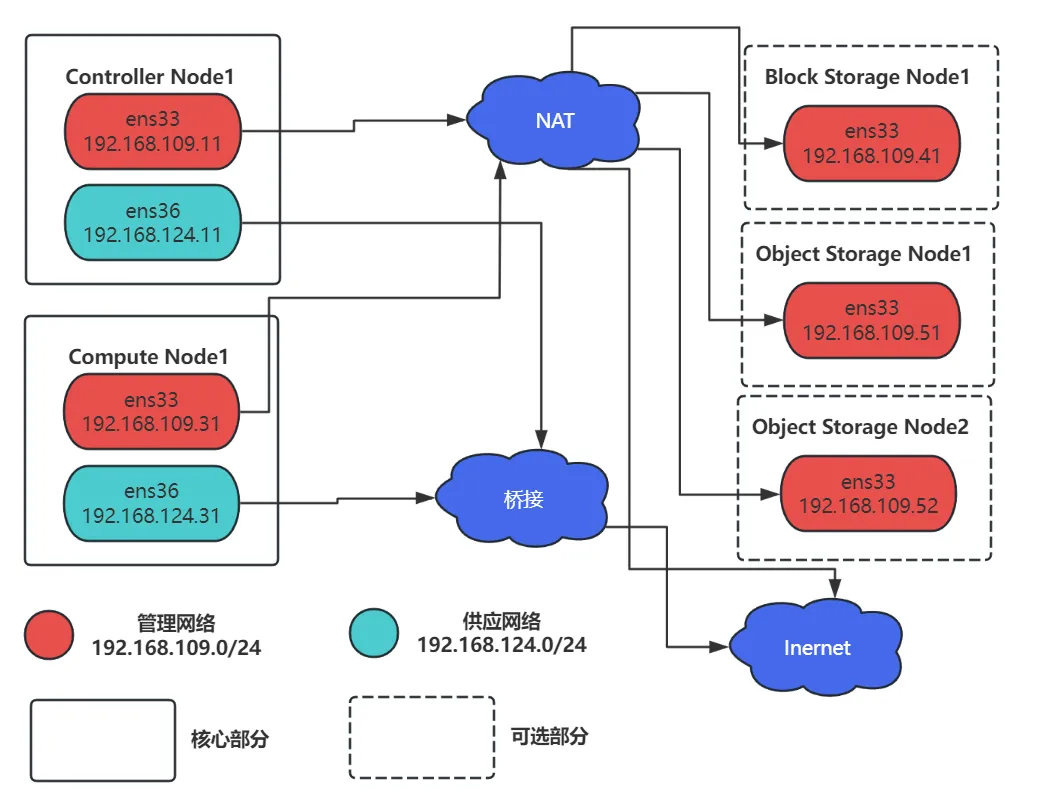

1.1 Architecture

Architecture diagram for one controller node and one compute node:

In a production environment, the management network is an internal network and the provider network connects to the Internet.

1.2 Host information

Hosts:

| Hostname | ens33 (NAT) | ens36 (bridged) | Resources | Role |
|---|---|---|---|---|
| controller | 192.168.109.11/24, gateway 192.168.109.2 | 192.168.124.11/24, gateway 192.168.124.1 | 6 CPU cores, 8 GB RAM | Controller node |
| compute1 | 192.168.109.31/24, gateway 192.168.109.2 | 192.168.124.31/24, gateway 192.168.124.1 | 2 CPU cores, 2 GB RAM | Compute node |

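The second NIC gives instances access to the Internet. In a VM lab, use the subnet that the bridged adapter actually lands on. This subnet does not need a gateway.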

2. hosts file

On all nodes, add hostname resolution entries to /etc/hosts:

cat << EOF >> /etc/hosts

192.168.109.11 controller

192.168.109.31 compute1

EOF

3. NTP configuration
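Sections 3.1 and 3.2 make the same kind of edit to /etc/chrony/chrony.conf: they comment out the default pool lines and add one or two directives. The sketch below wraps that edit in a reusable function. It assumes the stock Ubuntu 22.04 file, where the default time sources are lines starting with "pool ". configure_chrony is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out the default "pool" lines in a chrony.conf
# and append each given directive once, so the function is safe to re-run.
configure_chrony() {
  local conf=$1
  shift
  [ -e "$conf.bak" ] || cp "$conf" "$conf.bak"   # keep the first backup only
  sed -i 's/^pool /#pool /' "$conf"              # disable the default pools
  local directive
  for directive in "$@"; do
    grep -qxF "$directive" "$conf" || printf '%s\n' "$directive" >> "$conf"
  done
}

# Controller (3.1):
#   configure_chrony /etc/chrony/chrony.conf "server time2.aliyun.com iburst" "allow 192.168.109.0/24"
# Other nodes (3.2):
#   configure_chrony /etc/chrony/chrony.conf "server controller iburst"
```

Restart chrony afterwards, exactly as in the manual steps.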

3.1 Controller node

On the controller node, install and configure chrony for time synchronization:

# Install the package

apt install chrony -y

# Edit the configuration file

cp /etc/chrony/chrony.conf /etc/chrony/chrony.conf.bak

vim /etc/chrony/chrony.conf

# Add the following two lines and comment out the other pool lines

server time2.aliyun.com iburst

allow 192.168.109.0/24

# Restart the service and enable it at boot

systemctl restart chrony && systemctl enable chrony

# Verify the service

chronyc sources -v

3.2 Other nodes

On the other nodes, install and configure chrony:

# Install the package

apt install chrony -y

# Edit the configuration file

cp /etc/chrony/chrony.conf /etc/chrony/chrony.conf.bak

vim /etc/chrony/chrony.conf

# Add the following line and comment out the other pool lines

server controller iburst

# Restart the service and enable it at boot

systemctl restart chrony && systemctl enable chrony

# Verify the service

chronyc sources -v

4. Add the package repository

On all nodes, add the OpenStack 2024.1 (Caracal) package repository and install the client:

add-apt-repository cloud-archive:caracal

apt install python3-openstackclient -y

5. Database

Install and configure the database:

# Install the packages

apt install mariadb-server python3-pymysql -y

# Create the configuration file

cat << EOF > /etc/mysql/mariadb.conf.d/99-openstack.cnf

[mysqld]

bind-address = 192.168.109.11

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

EOF

Restart the database and enable it at boot:

systemctl restart mariadb && systemctl enable mariadb

Secure the database installation:

mysql_secure_installation

# The prompts that follow, with the suggested answers:

Enter current password for root (enter for none): # press Enter

Switch to unix_socket authentication [Y/n] # n

Change the root password? [Y/n] # n

Remove anonymous users? [Y/n] # y

Disallow root login remotely? [Y/n] # y

Remove test database and access to it? [Y/n] # y

Reload privilege tables now? [Y/n] # y

6. Message queue

Install RabbitMQ:

apt install rabbitmq-server -y

Add the openstack user:

rabbitmqctl add_user openstack RABBIT_PASS

Grant the openstack user configure, write, and read permissions:

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

7. Memcached

Install and configure memcached:

# Install the packages

apt install memcached python3-memcache -y

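# Edit the configuration file so memcached listens on the management IP instead of 127.0.0.1

sed -i 's/^-l 127\.0\.0\.1/-l 192.168.109.11/' /etc/memcached.conf

grep '^-l' /etc/memcached.conf

Restart the service and enable it at boot: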

systemctl restart memcached && systemctl enable memcached

systemctl status memcached

8. Keystone deployment

8.1 Installation and configuration

Create the database and grant privileges:

mysql

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';

quit

Install keystone and edit its configuration file:

# 1. Install the package

apt install keystone -y

# 2. Edit the configuration file

cp /etc/keystone/keystone.conf /etc/keystone/keystone.conf.bak

grep -Ev '^$|#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf

vim /etc/keystone/keystone.conf

# Configure database access (and delete the default connection line)

[database]

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

# Configure the Fernet token provider

[token]

provider = fernet

Populate the keystone database:

# Populate the database

su -s /bin/sh -c "keystone-manage db_sync" keystone

# Check that the tables were created

mysql keystone -e 'show tables;'

Initialize the Fernet key repositories:

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service:

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
--bootstrap-admin-url http://controller:5000/v3/ \
--bootstrap-internal-url http://controller:5000/v3/ \
--bootstrap-public-url http://controller:5000/v3/ \
--bootstrap-region-id RegionOne
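The CREATE DATABASE / GRANT statements in 8.1 repeat later for glance, placement, nova and neutron, each time with a different name and password. The sketch below prints those statements for any service. It assumes MariaDB, which still accepts GRANT ... IDENTIFIED BY. service_db_sql is a hypothetical helper, and IF NOT EXISTS is an addition so that re-running it does not fail.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the CREATE DATABASE and GRANT statements that
# each service section of this guide types by hand in the mysql shell.
# Arguments: database name, database user, password.
service_db_sql() {
  local db=$1 user=$2 pass=$3
  cat <<EOF
CREATE DATABASE IF NOT EXISTS $db;
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'localhost' IDENTIFIED BY '$pass';
GRANT ALL PRIVILEGES ON $db.* TO '$user'@'%' IDENTIFIED BY '$pass';
EOF
}

# Usage (as root on the controller):
#   service_db_sql keystone keystone KEYSTONE_DBPASS | mysql
#   service_db_sql glance glance GLANCE_DBPASS | mysql
```

For nova, call it three times (nova_api, nova, nova_cell0) with the same nova user and NOVA_DBPASS.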

8.2 Configure Apache

Set the ServerName option in the Apache configuration:

cat << EOF >> /etc/apache2/apache2.conf

ServerName controller

EOF

Restart Apache and enable it at boot:

systemctl restart apache2.service && systemctl enable apache2.service

8.3 Environment variables

Set the environment variables for the admin user:

cat << EOF >> /etc/profile

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

source /etc/profile

After reconnecting to the server, test:

openstack token issue

8.4 Verification

Unset the OS_AUTH_URL and OS_PASSWORD environment variables:

unset OS_AUTH_URL OS_PASSWORD

Request an authentication token as the admin user:

root@controller:~# openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue

# Prompt and output:

Password: # enter the password set earlier (ADMIN_PASS)

+------------+--------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+--------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2025-06-26T11:28:20+0000 |

| id | gAAAAABoXSDEpyTcYlpNN8DHXb0A_OYE1wQO4_jdqT7AHBm9bl5hUOgDH5JZ1HIhT2k-eR7fb1vnPyG56Ik9grSNBHZZ0VwkXBkoO9YJtLnEUe6- |

| | n5MOUVx_DlRnPdy3D9e_CkruN1mPHM5_99Sluyx8cjF9aatikZPj_q-BzUxtXtjxpB9s7Ws |

| project_id | 4893e6d75a2540b8918f7db756da3c03 |

| user_id | 306177c6419b4488a25a6f51359032b7 |

+------------+--------------------------------------------------------------------------------------------------------------------------------------+

9. Glance deployment

9.1 Basic configuration

Create the database and grant privileges:

mysql

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

quit

Create the glance user:

openstack user create --domain default --password GLANCE_PASS glance

Add the admin role to the glance user in the service project:

openstack role add --project service --user glance admin

Create the service entity:

openstack service create --name glance --description "OpenStack Image" image

Create the Image service API endpoints:

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

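openstack endpoint create --region RegionOne image admin http://controller:9292

Optionally, configure registered quota limits for images. The --os-cloud devstack-system-admin option comes from the official guide and names an entry in clouds.yaml, so adjust it to match the credentials available in your deployment: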

openstack --os-cloud devstack-system-admin registered limit create \
--service glance --default-limit 1000 --region RegionOne image_size_total

openstack --os-cloud devstack-system-admin registered limit create \
--service glance --default-limit 1000 --region RegionOne image_stage_total

openstack --os-cloud devstack-system-admin registered limit create \
--service glance --default-limit 100 --region RegionOne image_count_total

openstack --os-cloud devstack-system-admin registered limit create \
--service glance --default-limit 100 --region RegionOne \
image_count_uploading

If you configure registered limits, you must also set use_keystone_limits = True in the [DEFAULT] section of /etc/glance/glance-api.conf and fill in its [oslo_limit] section (see 9.2).
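The four registered limit create calls above differ only in the resource name and the default value. The sketch below loops over them. It assumes the same RegionOne region and defaults. create_glance_limits is a hypothetical helper. Any authentication options, such as the --os-cloud devstack-system-admin used above, are passed through as arguments.

```shell
#!/usr/bin/env bash
# Resource name and default limit pairs, copied from the commands above.
GLANCE_LIMITS="image_size_total:1000 image_stage_total:1000 image_count_total:100 image_count_uploading:100"

# Hypothetical helper: create each registered limit for the glance service.
# Extra arguments (for example --os-cloud NAME) are passed to every openstack call.
create_glance_limits() {
  local pair name limit
  for pair in $GLANCE_LIMITS; do
    name=${pair%%:*}    # text before the colon
    limit=${pair#*:}    # text after the colon
    openstack "$@" registered limit create \
      --service glance --default-limit "$limit" --region RegionOne "$name" || return 1
  done
}

# Usage:
#   create_glance_limits --os-cloud devstack-system-admin
```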

9.2 Install and configure glance

Install glance and configure it:

# Install the package

apt install glance -y

# Edit the configuration file

cp /etc/glance/glance-api.conf /etc/glance/glance-api.conf.bak

grep -Ev '^$|#' /etc/glance/glance-api.conf.bak > /etc/glance/glance-api.conf

vim /etc/glance/glance-api.conf

# Configure database access (remove any other connection lines)

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

# Configure Identity service access

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

flavor = keystone

# Configure the local file system store and the location of image files

[DEFAULT]

enabled_backends=fs:file

[glance_store]

default_backend = fs

# Add an [fs] section after [glance_store]

[fs]

filesystem_store_datadir = /var/lib/glance/images/

# Configure access to keystone (used by the registered limits from 9.1)

[oslo_limit]

auth_url = http://controller:5000

auth_type = password

user_domain_id = default

username = glance

system_scope = all

password = GLANCE_PASS

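# Replace with the ID of the glance endpoint in your own deployment (see openstack endpoint list --service glance)

endpoint_id = 340be3625e9b4239a6415d034e98aace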

region_name = RegionOne

Give the glance user reader access to system-scoped resources such as limits:

openstack role add --user glance --user-domain Default --system all reader

Populate the glance database:

su -s /bin/sh -c "glance-manage db_sync" glance

mysql glance -e 'show tables;'

Restart glance-api and enable it at boot:

systemctl restart glance-api && systemctl enable glance-api

9.3 Verification

Download a test image to the controller node:

wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

If the download is slow, fetch the file on a machine with a faster connection and upload it to the server.
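The upload below declares --disk-format qcow2, so it may be worth confirming first that the downloaded or copied file really is a qcow2 image and not, for example, an HTML error page. qcow2 files start with the magic bytes QFI\xfb (hex 514649fb). If qemu-utils is installed, qemu-img info gives a fuller answer. The helper name is_qcow2 is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the file starts with the qcow2 magic bytes
# "QFI\xfb" (hex 51 46 49 fb), fail otherwise (including unreadable files).
is_qcow2() {
  local magic
  magic=$(head -c 4 "$1" 2>/dev/null | od -An -v -tx1 | tr -d ' \n')
  [ "$magic" = "514649fb" ]
}

# Usage:
#   is_qcow2 cirros-0.4.0-x86_64-disk.img && echo "looks like qcow2"
```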

Upload the image to glance:

glance image-create --name "cirros" \
--file cirros-0.4.0-x86_64-disk.img \
--disk-format qcow2 --container-format bare \
--visibility=public
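If you prefer the unified openstack client installed in step 4, you can do the same upload with openstack image create. The sketch assumes the same file name and formats as above. upload_image is a hypothetical wrapper, and its --public flag corresponds to --visibility=public.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: upload an image file with the unified openstack client,
# using the same formats and visibility as the glance command above.
upload_image() {
  local name=$1 file=$2
  openstack image create "$name" \
    --file "$file" \
    --disk-format qcow2 \
    --container-format bare \
    --public
}

# Usage:
#   upload_image cirros cirros-0.4.0-x86_64-disk.img
```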

List the images in glance:

root@controller:~# glance image-list

# Output

+--------------------------------------+--------+

| ID | Name |

+--------------------------------------+--------+

| eb2d2c9d-de2f-4d68-a91b-62d033223b6c | cirros |

+--------------------------------------+--------+

The matching image file also appears under /var/lib/glance/images/:

root@controller:~# ll -h /var/lib/glance/images/

# Output

total 13M

drwxr-x--- 2 glance glance 4.0K Jun 26 10:53 ./

drwxr-x--- 7 glance glance 4.0K Jun 26 10:52 ../

-rw-r----- 1 glance glance 13M Jun 26 10:53 eb2d2c9d-de2f-4d68-a91b-62d03

10. Placement deployment

10.1 Basic configuration

Create the placement database and grant privileges:

mysql

CREATE DATABASE placement;

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';

quit

Create the placement user:

openstack user create --domain default --password PLACEMENT_PASS placement

Add the admin role to the placement user in the service project:

openstack role add --project service --user placement admin

Create the service entity:

openstack service create --name placement --description "Placement API" placement

Create the Placement API endpoints:

openstack endpoint create --region RegionOne placement public http://controller:8778

openstack endpoint create --region RegionOne placement internal http://controller:8778

openstack endpoint create --region RegionOne placement admin http://controller:8778

10.2 Install and configure the components

Install placement-api and configure it:

# Install the package

apt install placement-api -y

# Edit the configuration file

cp /etc/placement/placement.conf /etc/placement/placement.conf.bak

grep -Ev '^$|#' /etc/placement/placement.conf.bak > /etc/placement/placement.conf

vim /etc/placement/placement.conf

# Configure database access (remove the existing connection line)

[placement_database]

connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement

# Configure Identity service access

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://controller:5000/v3

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = PLACEMENT_PASS

Populate the placement database:

su -s /bin/sh -c "placement-manage db sync" placement

mysql placement -e "show tables;"

Restart Apache:

systemctl restart apache2

10.3 Verification

Run the status check:

root@controller:~# placement-status upgrade check

# Output

+-------------------------------------------+

| Upgrade Check Results |

+-------------------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Policy File JSON to YAML Migration |

| Result: Success |

| Details: None |

+-------------------------------------------+

11. Nova deployment

11.1 Controller node

11.1.1 Basic configuration

Create the nova_api, nova, and nova_cell0 databases and grant privileges:

mysql

CREATE DATABASE nova_api;

CREATE DATABASE nova;

CREATE DATABASE nova_cell0;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

quit

Create the nova user:

openstack user create --domain default --password NOVA_PASS nova

Add the admin role to the nova user in the service project:

openstack role add --project service --user nova admin

Create the service entity:

openstack service create --name nova --description "OpenStack Compute" compute

Create the Compute API endpoints:

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

11.1.2 Install and configure the components

Install the packages and configure them:

# Install the packages

apt install nova-api nova-conductor nova-novncproxy nova-scheduler -y

# Edit the configuration file

cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

vim /etc/nova/nova.conf

# Configure database access (remove the existing connection lines)

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

# Configure message queue access

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/

# Configure Identity service access

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

# Configure service user tokens

[service_user]

send_service_user_token = true

auth_url = http://controller:5000/v3

auth_strategy = keystone

auth_type = password

project_domain_name = Default

project_name = service

user_domain_name = Default

username = nova

password = NOVA_PASS

# Set my_ip to the management interface IP address of the controller node

[DEFAULT]

my_ip = 192.168.109.11

# Configure the VNC proxy to use the management interface IP address of the controller node

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

# Configure the location of the Image service API

[glance]

api_servers = http://controller:9292

# Configure the lock path

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

# Configure access to the Placement service

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

Populate the nova-api database:

su -s /bin/sh -c "nova-manage api_db sync" nova

Register the cell0 database:

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell:

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

Populate the nova database:

su -s /bin/sh -c "nova-manage db sync" nova

Verify that cell0 and cell1 are registered correctly:

root@controller:~# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

# Output

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@controller/nova_cell0 | False |

| cell1 | bfa96eb1-fc77-4cfd-9edf-35929c062959 | rabbit://openstack:****@controller:5672/ | mysql+pymysql://nova:****@controller/nova | False |

+-------+--------------------------------------+------------------------------------------+-------------------------------------------------+----------+

Restart the services and enable them at boot:

systemctl restart nova-api nova-scheduler nova-conductor nova-novncproxy && systemctl enable nova-api nova-scheduler nova-conductor nova-novncproxy

11.2 Compute node

Install nova-compute and configure it:

# Install the package

apt install nova-compute -y

# Edit the configuration file

cp /etc/nova/nova.conf /etc/nova/nova.conf.bak

grep -Ev '^$|#' /etc/nova/nova.conf.bak > /etc/nova/nova.conf

vim /etc/nova/nova.conf

# Configure message queue access

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

# Configure Identity service access

[api]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000/

auth_url = http://controller:5000/

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

# Configure service user tokens

[service_user]

send_service_user_token = true

auth_url = http://controller:5000/v3

auth_strategy = keystone

auth_type = password

project_domain_name = Default

project_name = service

user_domain_name = Default

username = nova

password = NOVA_PASS

# Set [DEFAULT] my_ip to this node's management IP

[DEFAULT]

my_ip = 192.168.109.31

# Enable and configure remote console access

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

# Configure the location of the Image service API

[glance]

api_servers = http://controller:9292

# Configure the lock path

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

# Configure access to the Placement API

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://controller:5000/v3

username = placement

password = PLACEMENT_PASS

Restart the service and enable it at boot:

systemctl restart nova-compute && systemctl enable nova-compute

systemctl status nova-compute

Check and configure virtualization:

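- Check hardware virtualization support. A result of 0 means the CPU does not support it or it is disabled. If it is disabled, enable it; if it is not supported, apply the configuration change below.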

grep -Ec '(vmx|svm)' /proc/cpuinfo

- Change the virtualization type:

cp /etc/nova/nova-compute.conf /etc/nova/nova-compute.conf.bak

grep -Ev '^$|#' /etc/nova/nova-compute.conf.bak > /etc/nova/nova-compute.conf

vim /etc/nova/nova-compute.conf

# Change the virtualization type

[libvirt]

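virt_type = qemu

Restart nova-compute so that the new virtualization type takes effect:

systemctl restart nova-compute

11.3 Add the compute node to the cell database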

Confirm that the compute host is present in the database:

openstack compute service list --service nova-compute

Discover the compute hosts:

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

If the host is never added, check /var/log/nova/nova-compute.log on the compute node for errors.
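Host discovery only works after nova-compute on compute1 has registered itself, and that can take a short while after the service starts. The sketch below polls openstack compute service list until the host appears or a timeout passes, so you can run discover_hosts afterwards. The function name, the default 60-second timeout and the 5-second polling interval are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until a nova-compute service for HOST is listed.
# Arguments: host name, timeout in seconds (default 60), poll interval (default 5).
wait_for_compute() {
  local host=$1 timeout=${2:-60} interval=${3:-5} waited=0
  while [ "$waited" -le "$timeout" ]; do
    if openstack compute service list --service nova-compute -f value -c Host \
        | grep -qxF "$host"; then
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "nova-compute on $host did not register within ${timeout}s" >&2
  return 1
}

# Usage:
#   wait_for_compute compute1 && su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
```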

To discover new hosts automatically at a regular interval, edit the configuration:

vim /etc/nova/nova.conf

# Automatic discovery interval, in seconds

[scheduler]

discover_hosts_in_cells_interval = 300

11.4 Verification

List the compute services:

root@controller:~# openstack compute service list

# Output

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

| 8c9ef2d3-544e-447b-b42c-7e5b6d52bdcd | nova-conductor | controller | internal | enabled | up | 2025-06-26T11:22:04.000000 |

| 9a1be894-d507-49bd-87be-0ac65b988eba | nova-scheduler | controller | internal | enabled | up | 2025-06-26T11:22:04.000000 |

| 5fc5c4eb-f0e4-4106-89a8-09cbfd3bc007 | nova-compute | compute1 | nova | enabled | up | 2025-06-26T11:22:10.000000 |

+--------------------------------------+----------------+------------+----------+---------+-------+----------------------------+

List the API endpoints in the Identity service catalog:

root@controller:~# openstack catalog list

# Output

+-----------+-----------+-----------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+-----------------------------------------+

| placement | placement | RegionOne |

| | | public: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | RegionOne |

| | | internal: http://controller:8778 |

| | | |

| nova | compute | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | RegionOne |

| | | admin: http://controller:8774/v2.1 |

| | | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | |

| glance | image | RegionOne |

| | | public: http://controller:9292 |

| | | RegionOne |

| | | internal: http://controller:9292 |

| | | RegionOne |

| | | admin: http://controller:9292 |

| | | |

| keystone | identity | RegionOne |

| | | internal: http://controller:5000/v3/ |

| | | RegionOne |

| | | admin: http://controller:5000/v3/ |

| | | RegionOne |

| | | public: http://controller:5000/v3/ |

| | | |

+-----------+-----------+-----------------------------------------+

List the images:

root@controller:~# openstack image list

# Output

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| eb2d2c9d-de2f-4d68-a91b-62d033223b6c | cirros | active |

+--------------------------------------+--------+--------+

Check that the cells and the Placement API are working:

root@controller:~# nova-status upgrade check

# Output

+--------------------------------------------------------------------+

| Upgrade Check Results |

+--------------------------------------------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Policy File JSON to YAML Migration |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Older than N-1 computes |

| Result: Failure |

| Details: Current Nova version does not support computes older than |

| Antelope but the minimum compute service level in your |

| system is 61 and the oldest supported service level is 66. |

+--------------------------------------------------------------------+

| Check: hw_machine_type unset |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

| Check: Service User Token Configuration |

| Result: Success |

| Details: None |

+--------------------------------------------------------------------+

12. Neutron deployment

12.1 Controller node

12.1.1 Basic configuration

Create the neutron database and grant privileges:

mysql

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

quit

Create the neutron user:

openstack user create --domain default --password NEUTRON_PASS neutron

Add the admin role to the neutron user in the service project:

openstack role add --project service --user neutron admin

Create the service entity:

openstack service create --name neutron --description "OpenStack Networking" network

Create the Networking service API endpoints:

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

12.1.2 Networking options

Option 1: Provider networks

Install the packages:

apt install -y neutron-server neutron-plugin-ml2 \
neutron-openvswitch-agent neutron-dhcp-agent \
neutron-metadata-agent
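The bridge steps in this section run ovs-vsctl add-br and add-port. Option 2 and the compute-node section repeat those steps, and the plain commands fail if the bridge or port already exists. ovs-vsctl accepts --may-exist on both commands, so a re-runnable version could look like the sketch below. The function name is hypothetical, and the bridge and NIC defaults follow this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the provider bridge and attach the provider NIC.
# --may-exist makes each command succeed if the bridge/port is already there.
setup_provider_bridge() {
  local bridge=${1:-br-provider} nic=${2:-ens36}
  ovs-vsctl --may-exist add-br "$bridge" &&
    ovs-vsctl --may-exist add-port "$bridge" "$nic"
}

# Usage (on each node that carries the provider network):
#   setup_provider_bridge br-provider ens36
```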

Configure the neutron service:

cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

# Configure database access (remove the existing connection line)

[database]

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

# Enable the Modular Layer 2 (ML2) plug-in and disable additional plug-ins

[DEFAULT]

core_plugin = ml2

service_plugins =

# Configure message queue access

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

# Configure Identity service access

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = NEUTRON_PASS

# Configure Networking to notify Compute of network topology changes

[DEFAULT]

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

# Configure the lock path

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure the ML2 plug-in:

cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

# Enable flat and VLAN networks

type_drivers = flat,vlan

# Disable self-service networks

tenant_network_types =

# Enable the Open vSwitch mechanism driver

mechanism_drivers = openvswitch

# Enable the port security extension driver

extension_drivers = port_security

# Configure the provider virtual network as a flat network

[ml2_type_flat]

flat_networks = provider

Configure the Open vSwitch agent:

cp /etc/neutron/plugins/ml2/openvswitch_agent.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak > /etc/neutron/plugins/ml2/openvswitch_agent.ini

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

# Map the provider virtual network to the provider physical bridge

[ovs]

bridge_mappings = provider:br-provider

# Enable security groups and configure the native Open vSwitch (or hybrid iptables) firewall driver

[securitygroup]

enable_security_group = true

firewall_driver = openvswitch

#firewall_driver = iptables_hybrid

Create the external bridge and add the provider NIC to it:

ovs-vsctl add-br br-provider

ovs-vsctl add-port br-provider ens36

Configure the DHCP agent:

cp /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

vim /etc/neutron/dhcp_agent.ini

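# Configure the Open vSwitch interface driver and the Dnsmasq DHCP driver, and enable isolated metadata so that instances on provider networks can reach metadata over the network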

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

If you enabled the hybrid iptables firewall driver (firewall_driver = iptables_hybrid) in /etc/neutron/plugins/ml2/openvswitch_agent.ini, also add the following:

lsmod |grep br_netfilter

modprobe br_netfilter

cat << EOF >> /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

EOF

sysctl -p

Option 2: Self-service networks

Install the packages:

# If networking option 1 is already configured, install only:

apt install neutron-l3-agent -y

# If networking option 1 is not configured, install:

apt install neutron-server neutron-plugin-ml2 \
neutron-openvswitch-agent neutron-l3-agent neutron-dhcp-agent \
neutron-metadata-agent

Configure the neutron service (if you already configured option 1, only add the new settings):

cp /etc/neutron/neutron.conf /etc/neutron/neutron.conf.bak

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak > /etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

# Configure database access (remove the existing connection line)

[database]

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

# Enable the Modular Layer 2 (ML2) plug-in and the router service plug-in (router is new for option 2)

[DEFAULT]

core_plugin = ml2

service_plugins = router

# Configure message queue access

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

# Configure Identity service access

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = NEUTRON_PASS

# Configure Networking to notify Compute of network topology changes

[DEFAULT]

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[nova]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

# Configure the lock path

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure the ML2 plug-in (if you already configured option 1, only add the new settings):

cp /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugins/ml2/ml2_conf.ini.bak

grep -Ev '^$|#' /etc/neutron/plugins/ml2/ml2_conf.ini.bak > /etc/neutron/plugins/ml2/ml2_conf.ini

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

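# Enable flat, VLAN, and VXLAN networks (vxlan is new for option 2)

type_drivers = flat,vlan,vxlan

# Enable VXLAN self-service networks (new for option 2)

tenant_network_types = vxlan

# Enable the Open vSwitch and layer-2 population mechanism drivers (l2population is new for option 2)

mechanism_drivers = openvswitch,l2population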

# 启用端口安全扩展驱动程序

extension_drivers = port_security

# Configure the provider virtual network as a flat network

[ml2_type_flat]

flat_networks = provider

# Configure the VXLAN network identifier range for self-service networks (new for option 2)

[ml2_type_vxlan]

vni_ranges = 1:1000

Configure the Open vSwitch agent (if you already configured option 1, only add the new settings):

cp /etc/neutron/plugins/ml2/openvswitch_agent.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak > /etc/neutron/plugins/ml2/openvswitch_agent.ini

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

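# Map the provider virtual network to the provider physical bridge, and set the IP address of the interface that carries overlay traffic (local_ip is new for option 2)

[ovs]

bridge_mappings = provider:br-provider

local_ip = 192.168.109.11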

# Enable VXLAN overlay networks and layer-2 population (new for option 2)

[agent]

tunnel_types = vxlan

l2_population = true

# Enable security groups and configure the native Open vSwitch (or hybrid iptables) firewall driver

[securitygroup]

enable_security_group = true

firewall_driver = openvswitch

#firewall_driver = iptables_hybrid

Create the external bridge and add the provider NIC to it (skip this if you already created it for option 1):

ovs-vsctl add-br br-provider

ovs-vsctl add-port br-provider ens36

Configure the layer-3 agent (new for option 2):

cp /etc/neutron/l3_agent.ini /etc/neutron/l3_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/l3_agent.ini.bak > /etc/neutron/l3_agent.ini

vim /etc/neutron/l3_agent.ini

# Configure the Open vSwitch interface driver

[DEFAULT]

interface_driver = openvswitch

Configure the DHCP agent (same as option 1; skip this if you already configured it):

cp /etc/neutron/dhcp_agent.ini /etc/neutron/dhcp_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak > /etc/neutron/dhcp_agent.ini

vim /etc/neutron/dhcp_agent.ini

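# Configure the Open vSwitch interface driver and the Dnsmasq DHCP driver, and enable isolated metadata so that instances on provider networks can reach metadata over the network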

[DEFAULT]

interface_driver = openvswitch

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

12.1.3 Configure the metadata agent

Configure the metadata agent:

cp /etc/neutron/metadata_agent.ini /etc/neutron/metadata_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

vim /etc/neutron/metadata_agent.ini

# Configure the metadata host and the shared secret

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

12.1.4 Configure the Compute service

Configure the Compute service to use the Networking service:

vim /etc/nova/nova.conf

# Configure access parameters, enable the metadata proxy, and set the shared secret

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = true

metadata_proxy_shared_secret = METADATA_SECRET

12.1.5 Populate the database

Populate the neutron database:

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

12.1.6 Restart the services

Restart the Compute API service:

systemctl restart nova-api

Restart the Networking services and enable them at boot:

systemctl restart neutron-server neutron-openvswitch-agent neutron-dhcp-agent neutron-metadata-agent && \
systemctl enable neutron-server neutron-openvswitch-agent neutron-dhcp-agent neutron-metadata-agent

systemctl status neutron-server neutron-openvswitch-agent neutron-dhcp-agent neutron-metadata-agent

Restart the layer-3 agent and enable it at boot (required for networking option 2):

systemctl restart neutron-l3-agent && systemctl enable neutron-l3-agent

12.2 Compute node

12.2.1 Install the software

Install neutron-openvswitch-agent:

apt install neutron-openvswitch-agent -y

12.2.2 Configure the metadata agent

Configure the metadata agent:

cp /etc/neutron/metadata_agent.ini /etc/neutron/metadata_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak > /etc/neutron/metadata_agent.ini

vim /etc/neutron/metadata_agent.ini

# Configure the metadata host and the shared secret

[DEFAULT]

nova_metadata_host = controller

metadata_proxy_shared_secret = METADATA_SECRET

12.2.3 Networking options

Networking option 1: Provider networks

Configure the Open vSwitch agent:

cp /etc/neutron/plugins/ml2/openvswitch_agent.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak > /etc/neutron/plugins/ml2/openvswitch_agent.ini

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

# Map the provider virtual network to the provider physical bridge

[ovs]

bridge_mappings = provider:br-provider

# Enable security groups and configure the native Open vSwitch (or hybrid iptables) firewall driver

[securitygroup]

enable_security_group = true

firewall_driver = openvswitch

#firewall_driver = iptables_hybrid

Create the external bridge and add the provider NIC to it:

ovs-vsctl add-br br-provider

ovs-vsctl add-port br-provider ens36

If you enabled the iptables firewall driver (firewall_driver = iptables_hybrid) in /etc/neutron/plugins/ml2/openvswitch_agent.ini, also apply the following settings:

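# Check whether the module is already loaded
lsmod | grep br_netfilter

modprobe br_netfilter

# Also load br_netfilter at boot; otherwise the net.bridge.* keys below do not exist after a reboot
echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

cat << EOF >> /etc/sysctl.conf
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
EOF

sysctl -p

Networking option 2: Self-service networks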

If you have already configured networking option 1, you only need to modify or add the corresponding settings.
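The cp/grep pair used in these steps keeps a backup and trims the file, but `grep -Ev '^$|#'` drops every line that contains a `#` anywhere, not only comment lines. That is usually fine for freshly installed files; a slightly stricter sketch (the helper name `strip_ini` is hypothetical) removes only blank lines and lines whose first non-blank character is `#`:

```shell
# Sketch: back up an INI file, then rewrite it without blank lines and
# whole-line comments. Unlike grep -Ev '^$|#', a line that merely contains
# "#" (for example inside a value) is kept.
strip_ini() {
  cp "$1" "$1.bak" &&
    grep -Ev '^[[:space:]]*(#|$)' "$1.bak" > "$1"
}
```

For example, `strip_ini /etc/neutron/plugins/ml2/openvswitch_agent.ini` before editing with vim. If option 1 is already configured, the file has already been trimmed, so this step can be skipped; re-running it (or the original cp/grep pair) replaces the first .bak copy with the already-edited file.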

Configure the Open vSwitch agent (if networking option 1 is already configured, only add the new settings to the existing file):

cp /etc/neutron/plugins/ml2/openvswitch_agent.ini /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak

grep -Ev '^$|#' /etc/neutron/plugins/ml2/openvswitch_agent.ini.bak > /etc/neutron/plugins/ml2/openvswitch_agent.ini

vim /etc/neutron/plugins/ml2/openvswitch_agent.ini

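# Map the provider virtual network to the provider physical bridge, and set the IP address of the physical interface that handles overlay networks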

[ovs]

bridge_mappings = provider:br-provider

# Added for networking option 2
local_ip = 192.168.109.31

# Enable VXLAN overlay networks and layer-2 population (added for networking option 2)

[agent]

tunnel_types = vxlan

l2_population = true

# Enable security groups and configure the Open vSwitch native or hybrid iptables firewall driver

[securitygroup]

enable_security_group = true

firewall_driver = openvswitch

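#firewall_driver = iptables_hybrid

Create the external bridge and add the corresponding NIC to it. If you already did this for networking option 1, it does not need to be repeated; with --may-exist the commands are also safe to re-run:

ovs-vsctl --may-exist add-br br-provider

ovs-vsctl --may-exist add-port br-provider ens36

12.2.4 Configure the Compute service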

Configure the Compute service:

vim /etc/nova/nova.conf

# Configure the access parameters

[neutron]

auth_url = http://controller:5000

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

12.2.5 Start the services
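Before restarting nova-compute, a quick sketch can confirm that the [neutron] section of /etc/nova/nova.conf on compute1 contains every key listed in 12.2.4; a missing key may otherwise only surface later, when nova first contacts neutron. The helper name `missing_neutron_keys` is hypothetical:

```shell
# Sketch: print each required key that is absent from the [neutron]
# section of nova.conf; prints nothing when the section is complete.
missing_neutron_keys() {
  local file=${1:-/etc/nova/nova.conf} key
  for key in auth_url auth_type project_domain_name user_domain_name \
             region_name project_name username password; do
    awk -v key="$key" '
      # Track whether we are inside the [neutron] section
      /^[ \t]*[[]/ { in_sec = ($0 ~ /^[ \t]*[[]neutron[]][ \t]*$/); next }
      # A non-comment "key =" line inside the section counts as present
      in_sec && $0 ~ "^[ \t]*" key "[ \t]*=" { found = 1 }
      END { exit !found }
    ' "$file" || echo "$key"
  done
}
```

For example, `missing_neutron_keys /etc/nova/nova.conf`; an empty result means all keys are present.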

Restart the Compute service:

systemctl restart nova-compute

Restart the Open vSwitch agent service:

systemctl restart neutron-openvswitch-agent && systemctl enable neutron-openvswitch-agent

12.3 Verify operation
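Before checking the agents from the controller, you can confirm on each node where the provider bridge was created that ens36 is actually attached to br-provider. A minimal sketch built on the `ovs-vsctl br-exists` and `ovs-vsctl port-to-br` commands; the function name is hypothetical and the defaults are the bridge and NIC names used in this guide:

```shell
# Sketch: confirm that BRIDGE exists and that NIC is one of its ports.
# Usage: check_provider_bridge [BRIDGE] [NIC]; run as root on the node.
check_provider_bridge() {
  local bridge=${1:-br-provider} nic=${2:-ens36} owner
  # br-exists exits 0 only if the bridge is present
  if ! ovs-vsctl br-exists "$bridge"; then
    echo "bridge $bridge does not exist"
    return 1
  fi
  # port-to-br prints the name of the bridge that owns the port
  owner=$(ovs-vsctl port-to-br "$nic" 2>/dev/null)
  if [ "$owner" != "$bridge" ]; then
    echo "$nic is not attached to $bridge"
    return 1
  fi
  echo "$nic is attached to $bridge"
}
```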

Networking option 1: Provider networks

List the network agents:

root@controller:~# openstack network agent list

# Output

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| ID                                   | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| b2b9d6cf-3190-408a-82b5-cd922da53782 | DHCP agent         | controller | nova              | :-)   | UP    | neutron-dhcp-agent        |
| c011f960-2202-4a22-809d-344643338109 | Open vSwitch agent | compute1   | None              | :-)   | UP    | neutron-openvswitch-agent |
| e5202985-73ef-4464-8947-90e6a6b3680c | Open vSwitch agent | controller | None              | :-)   | UP    | neutron-openvswitch-agent |
| ffc5f1c2-7aa2-4d57-8e2b-3e780cd8fc12 | Metadata agent     | controller | None              | :-)   | UP    | neutron-metadata-agent    |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

The output should show three agents on the controller node and one agent on the compute node.
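Rather than reading the table by eye, a small sketch can count agents per host and flag any agent whose Alive column is not `:-)`. It assumes the default table output shown above; `summarize_agents` is a hypothetical helper:

```shell
# Sketch: summarize the table printed by `openstack network agent list`.
# Prints "HOST COUNT" per host (sorted) and returns non-zero if any agent's
# Alive column is not ":-)".
summarize_agents() {
  local out rc
  out=$(awk -F'|' '
    # Data rows split into 9 fields on "|"; borders have no "|" at all
    NF >= 8 {
      id = $2; host = $4; alive = $6
      gsub(/^[ \t]+|[ \t]+$/, "", id)
      gsub(/^[ \t]+|[ \t]+$/, "", host)
      gsub(/^[ \t]+|[ \t]+$/, "", alive)
      if (id == "ID") next          # skip the header row
      count[host]++
      if (alive != ":-)") dead++
    }
    END {
      for (h in count) print h, count[h]
      exit (dead > 0)
    }
  ')
  rc=$?
  printf '%s\n' "$out" | sort
  return "$rc"
}
```

For example, `openstack network agent list | summarize_agents` should print `compute1 1` and `controller 3` for option 1 (and `controller 4` for option 2), and it returns a non-zero status if any agent is not alive.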

Networking option 2: Self-service networks

List the network agents:

root@controller:~# openstack network agent list

# Output

+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| ID                                   | Agent Type         | Host       | Availability Zone | Alive | State | Binary                    |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+
| 064fda9a-d304-45bc-9496-4f533379a6ab | Open vSwitch agent | controller | None              | :-)   | UP    | neutron-openvswitch-agent |
| 2cd3748b-5dbc-45e0-a4e2-3a721ac9435c | Open vSwitch agent | compute1   | None              | :-)   | UP    | neutron-openvswitch-agent |
| 4327a284-ed72-4d8a-8747-3142172fae9f | DHCP agent         | controller | nova              | :-)   | UP    | neutron-dhcp-agent        |
| 6f6c16c7-c898-4d2f-8a3a-92d184e14ebf | Metadata agent     | controller | None              | :-)   | UP    | neutron-metadata-agent    |
| f508d9d8-0255-4068-99c3-b14d5b61aa95 | L3 agent           | controller | nova              | :-)   | UP    | neutron-l3-agent          |
+--------------------------------------+--------------------+------------+-------------------+-------+-------+---------------------------+

The output should show four agents on the controller node and one agent on the compute node.
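For networking option 2, the difference from option 1 is the L3 agent on the controller. A sketch that checks that specific binaries are present and alive on a given host, again parsing the default table output (the helper name `require_agents` is hypothetical):

```shell
# Sketch: usage: require_agents HOST BINARY... < agent-list-table
# Prints one line per binary that is missing or not alive on HOST and
# returns non-zero if there was any.
require_agents() {
  local host=$1 table bin missing=0
  shift
  table=$(cat)
  for bin in "$@"; do
    if ! printf '%s\n' "$table" | awk -F'|' -v h="$host" -v b="$bin" '
        NF < 8 { next }                          # skip border lines
        {
          for (i = 2; i <= 8; i++) gsub(/^[ \t]+|[ \t]+$/, "", $i)
          if ($4 == h && $8 == b && $6 == ":-)") ok = 1
        }
        END { exit !ok }'; then
      echo "missing or not alive: $bin on $host"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `openstack network agent list | require_agents controller neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent neutron-openvswitch-agent`.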

13. Horizon deployment

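In testing, Horizon deployed on the controller node could not be accessed, while the same deployment on the compute node worked; the cause has not been identified yet. The steps in this section are therefore performed on compute1.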
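Since Horizon runs on compute1 here but still uses the controller for memcached (`'LOCATION': 'controller:11211'`) and Keystone (port 5000 in `OPENSTACK_KEYSTONE_URL`), it can help to confirm from compute1 that both ports are reachable. A minimal sketch, assuming bash and coreutils `timeout` are available and that memcached on the controller listens on an address compute1 can reach; the function names are hypothetical:

```shell
# Sketch: succeed if a TCP connection to HOST PORT opens within 3 seconds.
# Uses bash's /dev/tcp redirection, so it is run through bash explicitly.
port_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Check host:port pairs; defaults are the controller endpoints Horizon uses.
check_backends() {
  local target rc=0
  [ "$#" -gt 0 ] || set -- controller:11211 controller:5000
  for target in "$@"; do
    if port_open "${target%:*}" "${target##*:}"; then
      echo "reachable: $target"
    else
      echo "unreachable: $target"
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_backends` on compute1; pass `host:port` pairs to check other endpoints.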

13.1 Installation and configuration

Install the package:

apt install openstack-dashboard -y

Edit the openstack-dashboard configuration:

cp /etc/openstack-dashboard/local_settings.py /root/

vim /etc/openstack-dashboard/local_settings.py

# Configure the dashboard to use the OpenStack services on the controller node

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*']

# Configure the session storage service

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',  # changing this backend causes errors
        'LOCATION': 'controller:11211',
    }
}

# Enable Identity API version 3 (Keystone in this guide is served at port 5000 under /v3, as in the token check in section 14)

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

# Append the following at the end of the file

# Enable support for domains

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

# Configure the API versions

OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 3,
}

# Configure Default as the default domain for users created via the dashboard

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

# Configure member as the default role for users created via the dashboard

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "member"

# If you chose networking option 1, disable support for layer-3 networking services

OPENSTACK_NEUTRON_NETWORK = {
    'enable_router': False,  # set to True if you configured networking option 2
    'enable_quotas': False,
    'enable_ipv6': False,
    'enable_distributed_router': False,
    'enable_ha_router': False,
    'enable_fip_topology_check': False,
}

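# (Optional) Configure the time zone: replace TIME_ZONE with a valid time zone identifier, e.g. "Asia/Shanghai"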

TIME_ZONE = "TIME_ZONE"

Reload the Apache service:

systemctl reload apache2.service

If /etc/apache2/conf-available/openstack-dashboard.conf does not already contain the following line, add it and then reload Apache again:

WSGIApplicationGroup %{GLOBAL}

13.2 Verify operation
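If the dashboard does not load, a syntax error in local_settings.py or the literal `TIME_ZONE` placeholder left in place are easy things to rule out first. A sketch (the helper name `check_dashboard_settings` is hypothetical) that parses the file without executing it and flags the placeholder; it assumes python3 is installed, which the Python-based dashboard package already requires:

```shell
# Sketch: usage: check_dashboard_settings [FILE]
check_dashboard_settings() {
  local file=${1:-/etc/openstack-dashboard/local_settings.py}
  # Parse (without executing) the file to catch syntax errors such as a
  # broken CACHES or OPENSTACK_NEUTRON_NETWORK dictionary.
  if ! python3 -c 'import ast, sys; ast.parse(open(sys.argv[1], "rb").read())' "$file" 2>/dev/null; then
    echo "syntax error in $file"
    return 1
  fi
  # The literal placeholder is not a valid time zone identifier.
  if grep -Eq '^[[:space:]]*TIME_ZONE[[:space:]]*=[[:space:]]*"TIME_ZONE"' "$file"; then
    echo "TIME_ZONE is still the placeholder value in $file"
    return 1
  fi
  echo "ok: $file"
}
```

Run `check_dashboard_settings` on compute1; after fixing anything it reports, reload Apache before retrying in the browser.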

Open the following address in a browser (the client machine needs a hosts entry that resolves compute1):

http://compute1/horizon

14. Verification commands by component

keystone:

openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue

glance:

glance image-list

placement:

placement-status upgrade check

nova:

openstack compute service list

openstack catalog list

openstack image list

nova-status upgrade check

neutron:

openstack network agent list

Horizon:

http://compute1/horizon
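To run the non-interactive checks above in one pass, a sketch like the following runs each command and reports OK or FAIL. It assumes the admin credentials are already exported in the shell (for example by sourcing an admin credentials file created earlier; its name is not given in this section), so the Keystone check is written as plain `openstack token issue` instead of the interactive form above. `run_checks` is a hypothetical helper:

```shell
# Sketch: read one command per line on stdin, run each, report OK/FAIL,
# and return non-zero if any command failed. Blank lines are ignored.
run_checks() {
  local cmd failed=0
  while IFS= read -r cmd; do
    [ -n "$cmd" ] || continue
    # </dev/null keeps a command from consuming the remaining list or
    # waiting on a password prompt
    if bash -c "$cmd" </dev/null >/dev/null 2>&1; then
      echo "OK: $cmd"
    else
      echo "FAIL: $cmd"
      failed=1
    fi
  done
  return "$failed"
}
```

For example: `printf '%s\n' 'openstack token issue' 'openstack compute service list' 'openstack network agent list' | run_checks`. Commands such as `placement-status upgrade check` and `nova-status upgrade check` read local configuration files and may need to be run as root on the controller.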